Hi Sachin,

It is a very simple question, and that is exactly what makes answering it so difficult (at least for me). I'll try to give an explanation using a simple model, at the risk of the experts here calling for me to be banned for oversimplifying concepts.

Let's assume that our chain consists of a DAC driving a power-amp, which in turn drives a pair of full-range speakers (so no crossovers to worry about in this case), and that the DAC produces a maximum line-level output voltage of 2V at full volume.

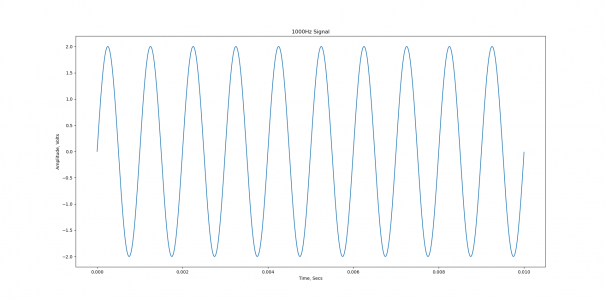

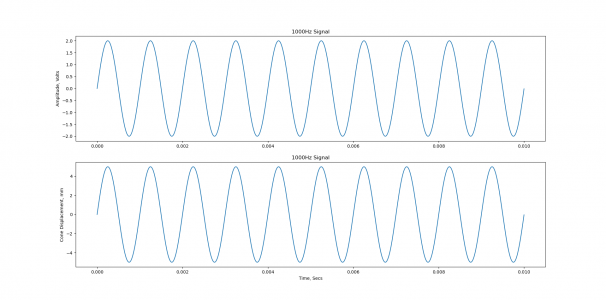

Now consider the case where the signal is a pure 1000Hz tone. This is what the DAC's output voltage looks like over time:

[Plot: DAC output voltage over time for the 1000Hz tone]

Note: We are going to consider only one channel in these examples.
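If you'd like to play with these waveforms yourself, here is a minimal sketch that generates the single-channel 1000Hz tone at full volume (2V peak). The 48kHz sample rate and the `sine_tone` helper are my own assumptions for illustration, not anything the DAC itself exposes.

```python
import math

SAMPLE_RATE = 48_000  # samples per second (assumed; a common DAC rate)
PEAK_VOLTS = 2.0      # max line-level output at full volume, from the example
FREQ_HZ = 1000.0      # the pure tone from the example

def sine_tone(freq_hz, peak_volts, duration_s, sample_rate=SAMPLE_RATE):
    """Return one output voltage per sample for a pure tone (hypothetical helper)."""
    n_samples = round(duration_s * sample_rate)
    return [peak_volts * math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(n_samples)]

# Two periods of the 1000Hz tone (2ms), one channel only
tone = sine_tone(FREQ_HZ, PEAK_VOLTS, 0.002)
print(len(tone), round(max(tone), 3), round(min(tone), 3))
```

The voltage swings symmetrically between +2V and -2V, which is the plain sine wave shown in the plot.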

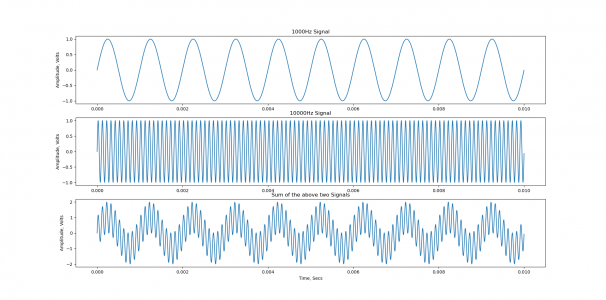

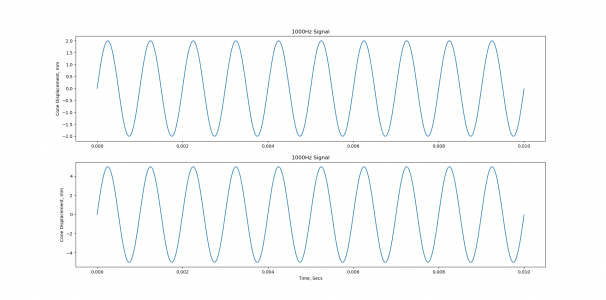

We have all come across this kind of waveform before, and there is nothing really interesting here. Now, consider the case where the signal consists of two tones: a 1000Hz tone and a 10000Hz tone. The first two plots illustrate how the DAC's output voltage at full volume varies over time when each tone is played separately, and the third plot illustrates the DAC's output voltage at full volume while playing the combined signal.

[Plots: DAC output voltage over time for the 1000Hz tone, the 10000Hz tone, and the combined signal]

Note: Here I'm assuming that the amplitudes of the tones were normalized when the signal was recorded, so that it reaches the maximum output voltage at full volume. To put it differently, each tone in this case is assumed to have a peak amplitude of 1V, so the combined signal can peak at no more than 1V + 1V = 2V.
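"Combining" two tones here simply means adding their voltages sample by sample. The sketch below does that for two 1V tones and measures the actual peak. The dense 1MHz sampling and the zero starting phase of both tones are assumptions made for the illustration. With these phases, the two peaks never line up exactly, so the combined peak comes out just under the 2V bound.

```python
import math

SAMPLE_RATE = 1_000_000  # dense sampling (assumed) so we don't miss the true peak

def tone(freq_hz, peak_volts, n):
    """Voltage of a sine tone at sample n, starting at zero phase (assumed)."""
    return peak_volts * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)

# A 1ms window: exactly one period of 1000Hz and ten periods of 10000Hz
n_samples = SAMPLE_RATE // 1000
combined = [tone(1000, 1.0, n) + tone(10000, 1.0, n) for n in range(n_samples)]

peak = max(abs(v) for v in combined)
print(f"peak of 1V + 1V tones: {peak:.3f} V")  # close to, but not above, 2V
```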

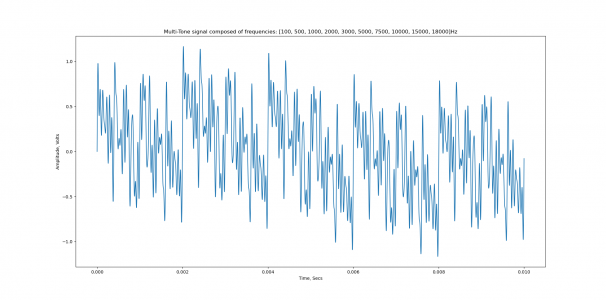

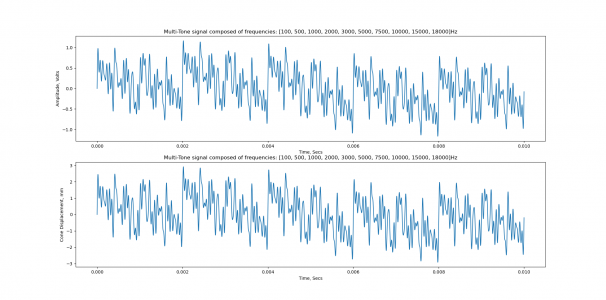

We can extend this further and consider a signal obtained by combining 10 tones. The plot below illustrates how the DAC's output voltage varies over time at full volume:

[Plot: DAC output voltage over time for the 10-tone signal]

The tones that make up the signal are listed in the plot's title. Would you have believed that 10 sine waves, when combined, could look so bad?
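The same sample-by-sample addition works for any number of tones. In the sketch below, the ten frequencies are purely hypothetical (the real ones are only given in the original plot's title). Following the same normalization idea as the two-tone note, each tone gets 2V / 10 = 0.2V so the sum can never exceed full scale.

```python
import math

SAMPLE_RATE = 1_000_000  # dense sampling (assumed)
FULL_SCALE_VOLTS = 2.0   # DAC max output at full volume, from the example

# HYPOTHETICAL frequencies: the actual ten tones are listed only in the
# original plot's title, which is not reproduced here.
FREQS_HZ = [100, 300, 700, 1000, 2000, 3500, 5000, 8000, 12000, 15000]

# Equal peak amplitude per tone, chosen so that even if every peak lined up,
# the sum could not exceed full scale (10 x 0.2V = 2V).
per_tone_volts = FULL_SCALE_VOLTS / len(FREQS_HZ)

def multitone_sample(n):
    """Sum of all tones at sample n, each starting at zero phase."""
    t = n / SAMPLE_RATE
    return sum(per_tone_volts * math.sin(2 * math.pi * f * t) for f in FREQS_HZ)

# A 10ms window covers a whole number of periods of every tone above
signal = [multitone_sample(n) for n in range(SAMPLE_RATE // 100)]
peak = max(abs(v) for v in signal)
print(f"each tone: {per_tone_volts:.2f} V, combined peak: {peak:.3f} V")
```

Plotting `signal` against time gives the same kind of jagged, "bad-looking" waveform, even though every ingredient is a smooth sine.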

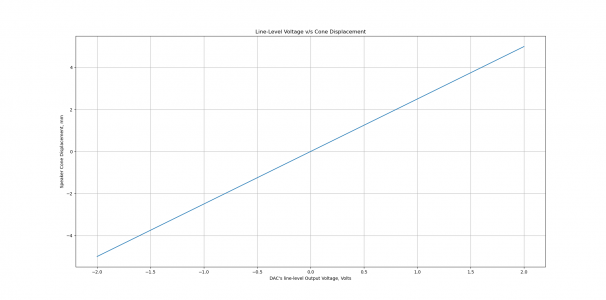

So far we have only seen what the DAC's line-level output voltage looks like. But your question is about the speaker cone's movement. In our example chain, the DAC drives the power-amp and the power-amp drives the speakers. This is where we are going to simplify concepts (a lot!).

Let us assume that a +ve voltage at the DAC's output results in a larger +ve voltage at the power-amp's output, which in turn pushes the speaker cone forward. Similarly, a -ve voltage at the DAC's output results in a larger -ve voltage at the power-amp's output, which pushes the speaker cone backward. When the DAC's output voltage is zero, the power-amp's output voltage is also zero and the speaker cone is not displaced at all (the same as when the chain is powered off).

By combining electrical, mechanical and acoustic engineering theory with some complex equations, we may be able to predict (to some extent) what the cone displacement is going to be for a given tone frequency and amplitude. But we are going to abstract all of this away using a simple linear equation (experts in these fields can tell how dumb I sound!):

1. A +2V line voltage at the DAC's output is going to displace the speaker cone by, say, +5mm ("+" indicates forward movement of the cone)

2. A -2V line voltage at the DAC's output is going to displace the speaker cone by, say, -5mm ("-" indicates backward movement of the cone)

3. 0V at the DAC's output is going to have no effect on the speaker cone
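Written as code, the three rules above collapse into a single straight line through the origin with a slope of 5mm / 2V = 2.5mm per volt. The function name below is just a hypothetical label for this toy model; it describes no real driver.

```python
MAX_LINE_VOLTS = 2.0    # DAC full-scale output, from the example
MAX_EXCURSION_MM = 5.0  # illustrative cone displacement at full scale, from the example

# Slope of the (deliberately oversimplified) linear model: 5mm / 2V = 2.5 mm/V
MM_PER_VOLT = MAX_EXCURSION_MM / MAX_LINE_VOLTS

def cone_displacement_mm(line_volts):
    """Toy model: + means forward, - means backward, frequency is ignored."""
    return MM_PER_VOLT * line_volts

for v in (2.0, -2.0, 0.0, 1.0):
    print(f"{v:+.1f} V -> {cone_displacement_mm(v):+.2f} mm")
```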

[Plot: the linear model relating DAC output voltage to cone displacement]

Note: I've taken the liberty (or atrocity?) to ignore the tone frequency in this model!

Now it is just a matter of changing the scale and units of the above graphs (in this model, every volt at the DAC's output corresponds to 2.5mm of cone travel) to get plots of cone displacement in mm over time for a given line-level voltage waveform.
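Because the model is linear, converting a whole voltage waveform into a displacement waveform is just multiplying every sample by the same 2.5mm/V factor. A minimal sketch for one period of the 1000Hz tone, assuming a 48kHz sample rate and a hypothetical `to_displacement_mm` helper:

```python
import math

SAMPLE_RATE = 48_000     # assumed sample rate
MM_PER_VOLT = 5.0 / 2.0  # toy model: 2V at the DAC -> 5mm of cone travel

def to_displacement_mm(volts_waveform):
    """Rescale a voltage waveform (V) into a cone-displacement waveform (mm)."""
    return [MM_PER_VOLT * v for v in volts_waveform]

# One period of the 1000Hz tone at full volume (2V peak)
volts = [2.0 * math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE)
         for n in range(SAMPLE_RATE // 1000)]
mm = to_displacement_mm(volts)
print(f"peak voltage {max(volts):.2f} V -> peak displacement {max(mm):.2f} mm")
```

The shape of the curve is unchanged; only the vertical axis is relabelled from volts to millimetres.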

First, the 1000Hz tone's case. The first plot below shows the DAC's output voltage over time and the second plot shows the speaker cone displacement in mm over time (based on our unrealistic, oversimplified linear model, of course):

[Plots: DAC output voltage and cone displacement over time for the 1000Hz tone]

Now, let's look at the case of the multi-tone signal.

[Plots: DAC output voltage and cone displacement over time for the multi-tone signal]

In this case, you can see that the cone often has to travel a long distance, forward and backward, very quickly. In practice, it is very difficult for a single driver to accomplish this. This is, loosely speaking, why full-range drivers have a limited bandwidth (usually falling short at the extreme ends of the audio spectrum) and why we often choose multi-driver speakers instead, as each driver can be optimized to handle only a certain range of frequencies.
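To put a rough number on "very quickly", we can estimate the cone's peak speed in our toy model by taking the change in displacement between neighbouring samples. For a single sine of amplitude A and frequency f, the peak speed is 2πfA, so adding a 10000Hz component multiplies the required speed many times over. The 1MHz sampling and the helper names are assumptions for this sketch, and the absolute speeds are meaningless for a real driver (the model ignores frequency entirely); only the comparison matters.

```python
import math

SAMPLE_RATE = 1_000_000  # dense sampling (assumed) for a decent derivative
MM_PER_VOLT = 5.0 / 2.0  # toy model from the post: 2V -> 5mm

def displacement_mm(tones, n):
    """Cone displacement (mm) at sample n for a list of (freq_hz, peak_volts)."""
    t = n / SAMPLE_RATE
    volts = sum(a * math.sin(2 * math.pi * f * t) for f, a in tones)
    return MM_PER_VOLT * volts

def peak_speed_m_per_s(tones, duration_s=0.001):
    """Largest cone speed over the window, estimated by finite differences."""
    n_samples = round(duration_s * SAMPLE_RATE)
    x = [displacement_mm(tones, n) for n in range(n_samples + 1)]
    dt = 1.0 / SAMPLE_RATE
    # mm per second -> metres per second
    return max(abs(x[i + 1] - x[i]) / dt for i in range(n_samples)) / 1000.0

single = peak_speed_m_per_s([(1000, 2.0)])               # 1000Hz at full volume
double = peak_speed_m_per_s([(1000, 1.0), (10000, 1.0)]) # the two-tone signal
print(f"1000Hz alone:     {single:6.1f} m/s")
print(f"1000Hz + 10000Hz: {double:6.1f} m/s")
```

Even though both signals peak at about 2V (about 5mm), the two-tone signal demands more than five times the cone speed of the pure 1000Hz tone.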

Hope this was helpful!

With regards,

Sandeep Sasi

But I had to ask it nevertheless! There might be some other techno-novices like me who have wondered the same sometime and would benefit from your answers.
