Let me test my understanding by taking a stab at an answer.

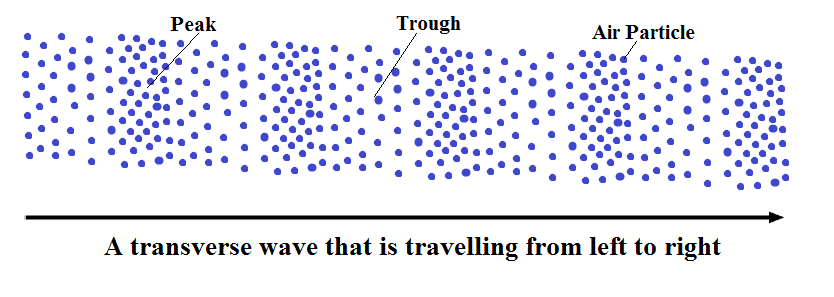

We do need dips, but these are nothing more than the variations in peak heights, all the way down to zero height. So if all the peak heights are captured, so are the dips.

And the wave is just a representation of the sound vibrations, built from two pieces of information: the height of each successive peak, and how many peaks occur in a second. Thinking of the wave as more than that leads to thinking there must be data points for the other parts of the "wave". In reality, there aren't any.

I am now fairly sure that the wave you see on an oscilloscope is drawn by the instrument's electronics from just these two pieces of information, and we end up assuming there are data points for every point on the lines that connect them.
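Those in-between points really don't need data of their own: for a tone below half the sampling rate, they are fully determined by the stored values. A standard way to show this is Whittaker-Shannon (sinc) interpolation. The Python sketch below is illustrative only. The function names are hypothetical, it assumes a pure sine tone and a finite run of samples, and it is not a claim about how any particular oscilloscope or DAC draws its trace. Note that each stored number is the wave's value at that sampling instant, not only at its peaks.

```python
import math


def sinc(x: float) -> float:
    """Normalized sinc, sin(pi*x) / (pi*x), defined as 1 at x = 0."""
    if x == 0.0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def sample_sine(freq_hz: float, rate_hz: float, n: int) -> list[float]:
    """Hypothetical helper: keep only the value of a unit sine at each sampling instant."""
    return [math.sin(2 * math.pi * freq_hz * k / rate_hz) for k in range(n)]


def reconstruct(samples: list[float], rate_hz: float, t: float) -> float:
    """Whittaker-Shannon interpolation: estimate the wave at time t (seconds)
    from the stored samples alone. With a finite run of samples this is an
    approximation, most accurate away from the ends of the run."""
    return sum(s * sinc(t * rate_hz - k) for k, s in enumerate(samples))


if __name__ == "__main__":
    rate = 44_100.0
    tone = 1_000.0
    samples = sample_sine(tone, rate, 2_000)
    # Halfway between sample 1000 and sample 1001: no value was stored here.
    t = 1000.5 / rate
    print(f"reconstructed: {reconstruct(samples, rate, t):.4f}")
    print(f"true value:    {math.sin(2 * math.pi * tone * t):.4f}")
```

Evaluated halfway between two samples, where nothing was stored, the reconstructed value closely matches the true sine; the small remaining error comes from using a finite run of samples rather than an endless one.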

For digital audio to be 100% truthful, it has to carry all the information about the peak heights, as many times as they occur in a second. Sampling 40,000 times a second does this for all the data points that exist for sound frequencies up to 20 kHz. The extra headroom up to 44,100 samples a second (the CD rate) is a margin of safety.
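The "times two" in that arithmetic comes from the sampling theorem: any tone above half the sampling rate (the Nyquist frequency) produces the same sample values as some lower tone, so the two can't be told apart afterward. The Python sketch below, with hypothetical helper names, checks the 2 × 20 kHz = 40 kHz figure and shows a 30 kHz tone landing on exactly the same samples as a 14.1 kHz tone at 44.1 kHz. It assumes ideal pure tones and says nothing about real converter hardware.

```python
import math


def alias_frequency(freq_hz: float, rate_hz: float) -> float:
    """Hypothetical helper: the frequency at which a pure tone shows up after
    sampling at rate_hz. Tones at or below rate_hz / 2 come through unchanged;
    anything above folds back to a lower frequency."""
    f = freq_hz % rate_hz
    return min(f, rate_hz - f)


def cosine_samples(freq_hz: float, rate_hz: float, n: int) -> list[float]:
    """Sample values of a unit-amplitude cosine tone."""
    return [math.cos(2 * math.pi * freq_hz * k / rate_hz) for k in range(n)]


if __name__ == "__main__":
    highest_audible = 20_000.0
    print("minimum sample rate:", 2 * highest_audible)  # 40000.0
    rate = 44_100.0
    print(alias_frequency(20_000.0, rate))  # 20000.0: below rate / 2, kept as is
    print(alias_frequency(30_000.0, rate))  # 14100.0: folds down
    # At 44.1 kHz, a 30 kHz tone and a 14.1 kHz tone give the same samples.
    a = cosine_samples(30_000.0, rate, 100)
    b = cosine_samples(14_100.0, rate, 100)
    print(max(abs(x - y) for x, y in zip(a, b)))  # only floating-point rounding
```

This folding is why recorders filter out everything above about 20 kHz before sampling; the gap between 20 kHz and 22.05 kHz (half of 44.1 kHz) gives that filter room to roll off, which is the practical side of the margin of safety.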

When this doesn't happen in practice, it is not because of a flaw in this reasoning, but because of engineering constraints that must be overcome in converting the samples into equally truthful electrical signals that reach the speakers.

Digressing again: my experience with modern digital audio equipment tells me these constraints have now been overcome to the point that further improvements can't be heard in a well-constructed listening test. The solutions have by now also found their way into inexpensive digital components.