I have explained 'upconversion', scaling, 'upscaling' to a certain extent in the following threads:

http://www.hifivision.com/television/2415-video-resolution-1080p-upscaling-1080p.html (Post 27)

http://www.hifivision.com/television/2160-samsung-sony-3.html

http://www.hifivision.com/surround-amplifiers-receivers/2680-ht-setup-future-proof-vfm.html

But I see that there is a lot of confusion in many people's minds (including mine) about 'upconversion' and 'upscaling'. I also notice that many manufacturers use these terms loosely, giving wrong impressions to potential buyers. When you question a dealer he happily says yes to all your questions about upconversion/upscaling. 'Latest technologies, Sir', he enthuses. But when you start drilling down to specifics, he backs off and usually says he will ask his technical person to call back. Sheeesh, man!! So all this time, who was I speaking to, the roadside vegetable vendor?

Jokes apart, what is the difference between 'upconversion' and 'upscaling'?

Actually quite a lot. Upconversion is a very broad term that could include anything from changing one connection format to another (called transcoding), to deinterlacing or conversion of interlaced video to progressive video, to scaling of resolution. (Up)scaling, on the other hand, specifically refers to the scaling of resolution of a video signal. Let us look at these one by one.

TRANSCODING

Transcoding refers to changing one signal format to another, such as composite video to S-video or component video.

Transcoding has been around for a long time, and literally every TV has been doing it, albeit without our knowledge. A video signal is usually shot using an RGB camera, converted to component for transmission, and re-converted by our TV for display in RGB. Why is this done? Let us see.

RGB represents the three primary colors: Red, Green, and Blue. These colors are mixed in various proportions to give us all the other colors. The catch is that RGB needs three full-bandwidth signals, which makes it very inefficient for transmission. In the early years of TV transmission, when we were switching over from B&W to color, engineers had to figure out a way to attach the color variation signals without disturbing the B&W signals. They modulated the color variation signals onto a subcarrier (the phase carries the hue and the amplitude the saturation), creating the chrominance signal. In NTSC this subcarrier sits at about 3.58 MHz within the standard 4.2 MHz B&W signal. These signals together are what we know as the composite signal. This is further complicated by frequency modulating the audio at 4.5 MHz, mixing it with the other two signals, and amplitude modulating the result onto an RF carrier for transmission. When the TV receives it, it reverses all this and splits the signals apart to deliver the video and sound to you.

Component video has a saner solution. It consists of a full-bandwidth B&W or luminance signal and two narrower-band color variation signals. These signals are created by subtracting the luminance signal from the red and blue signals. At the receiving end, the TV has matching color decoders that add and subtract the three signals in the right proportions to recreate your RGB signal.

Luminance is designated as 'Y'. The color variations are thus designated as R-Y and B-Y. These are abbreviated to Cr and Cb for digital and Pr and Pb for analogue. Digital component video is thus called YCbCr, while analogue component is called YPbPr. Component video is more efficient for transmission and for storage.
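To make the color-difference idea concrete, here is a minimal sketch in Python. The weights are the ITU-R BT.601 luma coefficients used for standard-definition video; they are not mentioned above, so treat them (and the function names, which are made up for illustration) as my assumptions rather than the only way to do it.

```python
# Luma weights from ITU-R BT.601 (an assumption; HD video uses BT.709 weights).
KR, KG, KB = 0.299, 0.587, 0.114

def rgb_to_ypbpr(r, g, b):
    """Convert normalized RGB (0.0 to 1.0) to analogue-style Y, Pb, Pr."""
    y = KR * r + KG * g + KB * b        # luminance: weighted sum of R, G, B
    pb = (b - y) / (2 * (1 - KB))       # scaled B-Y, range -0.5 to +0.5
    pr = (r - y) / (2 * (1 - KR))       # scaled R-Y, range -0.5 to +0.5
    return y, pb, pr

def ypbpr_to_rgb(y, pb, pr):
    """Inverse conversion, as done by the color decoder in the TV."""
    b = y + pb * 2 * (1 - KB)
    r = y + pr * 2 * (1 - KR)
    g = (y - KR * r - KB * b) / KG      # green is recovered from Y, R and B
    return r, g, b

if __name__ == "__main__":
    samples = [("white", (1, 1, 1)), ("grey", (0.5, 0.5, 0.5)), ("red", (1, 0, 0))]
    for name, rgb in samples:
        print(name, [round(v, 3) for v in rgb_to_ypbpr(*rgb)])
```

Notice that white and grey produce (almost exactly) zero for both color-difference signals. That is why a B&W set could simply use Y and ignore the rest.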

And there's where we get to the processes that led to the other types of analogue video connections we have today and eventually to video transcoding in A/V receivers and preamplifiers.

Each of these formats, whether for radio transmission or for tapes and DVDs, involves some sacrifices, so engineers keep looking for ways to avoid them. First we sent audio and composite video through separate connections instead of keeping them together. Then came the S-video, or Y/C, connection, which keeps the luminance and chrominance separate instead of combining them into a composite signal. DVD introduced digital component formats that had to be converted to analogue component. Finally we have the players, STBs, HDTV tuners and the TV all talking in digital for the transmission part through HDMI.

An AV receiver with component video upconversion will thus transcode composite video to S-video, and S-video to component video. All analogue signals will thus be available at the component output. HDMI upconversion takes this one step further. Analogue signals, including S-video and composite video, are converted to digital using ADCs to create digital component video, and this is output through the HDMI port.

The Transcoding part of upconversion is only a convenience, as the signals cannot be improved in terms of performance.

DEINTERLACING

In a film, each still picture fills the entire frame. This is called a progressive source. The frame rate is the number of frames displayed in a second, which here is the same as the number of individual full pictures. In the case of film this is exactly 24. The human eye needs only about 16fps to perceive continuous motion, but for some reason Hollywood has adopted 24 as the norm.

Till some time ago we used to watch TV on what is called a cathode ray tube (CRT). This is an analogue device. In all CRT monitors, the image is painted on the screen by an electron beam that scans from one side of the display to the other, drawing thin lines. This scan is used to display the transitions in color, intensity and pattern, and each complete pass of the electron beam is called a FIELD. Analogue TV uses a process that relies on the brain's ability to integrate gradual transitions in pattern that the eye sees as the image is painted on the screen. Each picture or frame on a television screen (in the NTSC system) is composed of 525 lines, numbered from 1 to 525. During the first phase of screen drawing, the even-numbered lines are drawn: 2, 4, 6, 8 and so on. During the next phase, the odd lines are drawn: 1, 3, 5, 7 and so on. The eye integrates the two images to create a single image. An example of this is shown below. The fields are said to be interleaved together or interlaced. A frame or complete picture consists of two fields.

Like with film, each picture is from a different moment in time. Unlike film, the rate of individual pictures, or fields, is twice the frame rate.
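The field idea can be sketched in a few lines of Python. This is only an illustration with made-up function names (`split_fields`, `weave`), using a list of rows to stand in for a picture; it assumes rows are numbered from 1 as in the description above.

```python
def split_fields(frame):
    """Split a frame (a list of rows) into two fields.

    Rows are numbered from 1 as in the text, so the 'even' field holds
    rows 2, 4, 6... (list indexes 1, 3, 5...) and the 'odd' field holds
    rows 1, 3, 5... (list indexes 0, 2, 4...).
    """
    even_field = frame[1::2]
    odd_field = frame[0::2]
    return even_field, odd_field

def weave(even_field, odd_field):
    """Interleave two fields back into one full frame."""
    frame = []
    for i in range(len(odd_field)):
        frame.append(odd_field[i])
        if i < len(even_field):
            frame.append(even_field[i])
    return frame

if __name__ == "__main__":
    frame = [f"row {n}" for n in range(1, 8)]   # a tiny 7-line "picture"
    even, odd = split_fields(frame)
    print("even field:", even)
    print("odd field: ", odd)
    print("weaved back OK:", weave(even, odd) == frame)
```

Weaving gives back the original picture only when both fields come from the same moment in time. With a real interlaced camera they do not, which is where the trouble discussed further down starts.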

Though modern televisions are not made this way any more, and modern LCD and plasma TVs can display a full frame, remember that TV production and broadcast systems have been designed around the FIELD concept, which is entrenched around the world.

While film has only progressive frames, and analogue video only interlaced fields, digital video may have either or both. In the digital domain, displaying progressively is a property separate from the contents of the frame. Although it's possible to encode and store interlaced video as a stream of fields, most interlaced video is encoded in pairs in the form of frames. As a rule, frame based interlaced encoding is less efficient than progressive encoding. This means that files with comparable bitrates will have lower quality if they contain interlaced video.

Interlacing was introduced because it was efficient for transmission within the available bandwidth. But if you take a standard-definition video signal (now called 480) and compare it interlaced (480i) and progressively scanned (480p), the progressively scanned version will look smoother. The 480 is the number of active scan lines, and the "i" or "p" is the scanning method.

Modern TVs do not use scanning lines. Instead they flash a complete frame at a time. What used to be scan lines on a CRT TV are now pixel rows. Thus, since a modern TV displays complete frames, converting interlaced pictures to progressive ones is more a necessity than a performance improvement. It is better to watch a video at a lower progressive resolution than at a higher interlaced one. In other words, for a modern digital TV, 720p is better than 1080i.

Conversion from interlaced to progressive is not as easy as it seems.

When TV cameras shoot in interlaced formats, the fields are shot sequentially, with the second field acquired a sixtieth of a second later. If this time difference is not accounted for during deinterlacing, what are called 'jaggies' will appear. Faroudja excelled in this with their DCDi technology, which incorporated sophisticated motion-compensation techniques.
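To see why the time difference matters, here is a deliberately naive sketch in Python. It is NOT how DCDi or any commercial deinterlacer works; it is the simplest approach I know of, commonly called "bob", which builds a full frame from a single field by averaging the rows above and below each missing row. The function name and the tiny test picture are made up for illustration.

```python
def bob_deinterlace(field, top=True):
    """Build a full frame from ONE field by interpolating the missing rows.

    field: list of rows, each row a list of pixel values.
    top:   True if the field holds the first, third, fifth... rows.
    """
    height = 2 * len(field)
    frame = [None] * height
    offset = 0 if top else 1
    for i, row in enumerate(field):
        frame[2 * i + offset] = list(row)        # copy the rows we do have
    for y in range(height):
        if frame[y] is not None:
            continue
        above = frame[y - 1] if y > 0 else None
        below = frame[y + 1] if y + 1 < height else None
        if above is None:
            frame[y] = list(below)               # edge: repeat nearest row
        elif below is None:
            frame[y] = list(above)
        else:                                    # average the two neighbours
            frame[y] = [(a + b) / 2 for a, b in zip(above, below)]
    return frame

if __name__ == "__main__":
    # A bright vertical bar that moved 2 pixels between the two fields.
    top_field = [[0, 9, 0, 0, 0]] * 2       # bar at column 1 (earlier moment)
    bottom_field = [[0, 0, 0, 9, 0]] * 2    # bar at column 3 (1/60 s later)
    weaved = [top_field[0], bottom_field[0], top_field[1], bottom_field[1]]
    print("simple weave of both fields:")
    for row in weaved:
        print(row)
    print("bob of the top field only:")
    for row in bob_deinterlace(top_field, top=True):
        print(row)
```

The weaved picture shows the bar jumping left and right on alternate rows, while the bob version keeps the bar straight at the cost of half the vertical detail. Better deinterlacers try to get the best of both by detecting which parts of the picture are actually moving.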

With material that is shot on film, there is a different problem. A film that is progressive at 24 frames per second has to be converted to interlaced video at 30fps for NTSC and 25fps for PAL.

To do this in NTSC, each frame is first split into two fields. For example, let us assume we have four frames: A, B, C and D. These become A1 A2, B1 B2, C1 C2, D1 D2 in interlaced format. That is only 8 fields, while NTSC needs 10 fields (5 frames) in the same time, since 30/24 = 5/4.

The difference between the film and NTSC frame rates is large enough to be obvious to the eye and ear if nothing is done about it. One fix is to show every 4th frame of film twice, although this requires the sound to be handled separately to avoid "syncing/skipping" effects. A more convincing technique is "2:3 pull down", which turns every other frame of the film into three fields of video and gives a much smoother display. Thus, taking our example, you will have A1 A2, B1 B2 B1, C2 C1, D2 D1 D2: ten fields from four film frames, with the two field types still alternating. If this is not handled properly during deinterlacing, you will end up with what we know as 'combing'.
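The 2:3 cadence is easy to sketch in Python. The function name `pulldown_2_3` is made up for illustration, and the sketch assumes that field 1 and field 2 must strictly alternate in the video stream, as they do on an interlaced display, so the third field taken from a frame is a repeat of its first one.

```python
def pulldown_2_3(frames):
    """Map film frames to a list of (frame, field_number) video fields.

    Field 1 and field 2 alternate in the output, because an interlaced
    display always draws one after the other.
    """
    fields = []
    next_field = 1                           # which field the video expects next
    for index, frame in enumerate(frames):
        count = 2 if index % 2 == 0 else 3   # 2 fields, then 3, then 2...
        for _ in range(count):
            fields.append((frame, next_field))
            next_field = 2 if next_field == 1 else 1
    return fields

if __name__ == "__main__":
    sequence = pulldown_2_3(["A", "B", "C", "D"])
    print(" ".join(f"{frame}{number}" for frame, number in sequence))
    print("fields from 24 film frames:", len(pulldown_2_3(range(24))))
```

For frames A to D this prints A1 A2 B1 B2 B1 C2 C1 D2 D1 D2, and 24 film frames give 60 fields, which is one second of NTSC video. Pair the fields up and you get the video frames AA, BB, BC, CD, DD. The two mixed frames (BC and CD) are the ones that show combing if a deinterlacer simply weaves them together.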

Now, if you have a deinterlacer in your DVD player, AVR, and TV, which one do you choose? If you are watching a TV signal, it is best to let the TV upconvert with its own deinterlacer. If you are watching a DVD, ensure that the player has a deinterlacing chip from a well-known company such as Faroudja, Silicon Image, Silicon Optix, or Gennum. Deinterlacing in a receiver or preamp will seldom make sense unless it is also scaling the image.

SCALING

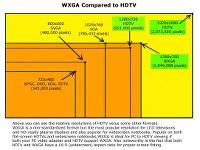

Regular modern digital TVs, called HDTVs, can accommodate four resolutions:

480i standard definition (SD) for conventional analogue video

480p for progressive scan DVD playback

720p for wide screen HD format

1080i for wide screen high definition format

HDTVs can accept inputs in any of these scan formats, with both 4:3 and 16:9 aspect ratios for SD signals. HD signals are only in 16:9.

Full HDTVs go up to 1080p progressively scanned images.

Though it is possible to design a CRT that can handle all of these, it has become cheaper to manufacture plasmas and LCDs and to convert some formats. Even rear projection as well as regular projectors use this approach.

In LCDs and plasmas, all incoming signals first have to be deinterlaced and converted to a progressive scan that exactly matches the pixel array. CRT HDTVs, on the other hand, work only at 480p or 1080i, and all incoming signals are converted to one of these. This means 480i gets converted to 480p and 720p to 1080i.

When an incoming signal is converted to a resolution higher than its own, this is called scaling or upscaling. The scaler interpolates between the existing pixels to create new, mathematically generated ones. Some of the better scalers not only generate new pixels within the current frame, they also refer to the preceding and succeeding frames when generating pixels, to make motion smoother.
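As a rough illustration of what "interpolating between the pixels" means, here is a minimal Python sketch using plain linear interpolation, first along the rows and then along the columns. The function names are made up, and real scaler chips use considerably more elaborate filters (and, as noted, sometimes neighbouring frames), so take this as the simplest possible case only.

```python
def resize_row(row, new_width):
    """Resize a row of pixel values to new_width using linear interpolation."""
    old_width = len(row)
    if new_width == 1 or old_width == 1:
        return [float(row[0])] * new_width
    out = []
    for x in range(new_width):
        pos = x * (old_width - 1) / (new_width - 1)   # position in source row
        left = int(pos)                               # nearest pixel to the left
        right = min(left + 1, old_width - 1)          # and to the right
        frac = pos - left                             # how far between the two
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out

def upscale(image, new_width, new_height):
    """Scale a 2-D image (list of rows) by interpolating rows, then columns."""
    wide = [resize_row(row, new_width) for row in image]
    columns = [resize_row(list(col), new_height) for col in zip(*wide)]
    return [list(row) for row in zip(*columns)]

if __name__ == "__main__":
    tiny = [[0, 10],
            [20, 30]]
    for row in upscale(tiny, 3, 3):
        print(row)
```

Every new value lies somewhere between its original neighbours. The scaler is making an educated guess; it cannot add detail that was never in the source.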

Not all scaling is upscaling. If you send a 1080p signal to a TV that has only 720p, the scaler has to work to reduce the resolution. This is called decimation.
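The ratio between 1080 and 720 is exactly 3:2, so the reduction can be sketched as turning every 3 pixels into 2. The Python below uses simple area averaging along one row; the function name is made up, and a real scaler would apply something similar (with better filtering) in both directions.

```python
def decimate_3_to_2(row):
    """Reduce a row by a 3:2 ratio (e.g. 1080 values to 720) by area averaging.

    Every 3 input pixels become 2 output pixels. Each output pixel covers
    1.5 input pixels, so the middle pixel is shared half and half.
    """
    if len(row) % 3 != 0:
        raise ValueError("row length must be a multiple of 3")
    out = []
    for i in range(0, len(row), 3):
        a, b, c = row[i], row[i + 1], row[i + 2]
        out.append((a + 0.5 * b) / 1.5)     # first output: a plus half of b
        out.append((0.5 * b + c) / 1.5)     # second output: half of b plus c
    return out

if __name__ == "__main__":
    column = list(range(1080))              # one column of a 1080-line picture
    print("lines in:", len(column), "lines out:", len(decimate_3_to_2(column)))
    print(decimate_3_to_2([3, 6, 9]))
```

Here detail is genuinely thrown away: three values are blended into two, and there is no way to get the original three back.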

Cheers
